Our Commitment to Responsible AI

At Interactions, we don’t just believe in the power of AI to improve customer experience; we believe it should be built on a foundation of trust. That means being transparent not only about how our AI works and how we use it, but also about how we protect customer data and give customers control over how it is used.

Behind that commitment is a team of passionate, principled experts dedicated to building AI that earns trust every step of the way.

Trust in AI Starts with People

Trust is built not just through innovation, but through the expertise, care, and accountability of the people shaping the technology. That’s why the people behind the AI matter more than ever. Clear governance, explainability, and accountability must be built in from the start, not added later. But the call for transparency goes beyond any one company; it is essential to AI moving forward with integrity.

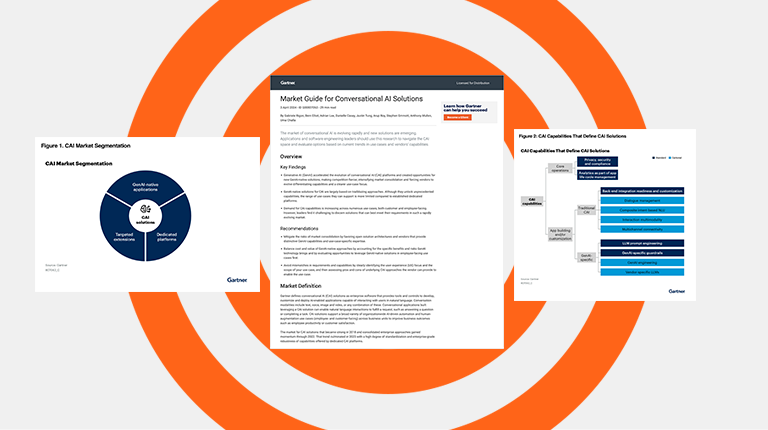

Go Behind the Scenes of Our AI Approach

Transparent AI you can understand and trust

We believe trust begins with clarity. That’s why we offer detailed disclosures about how our AI works, including model cards, data provenance, performance metrics, and the logic behind its decisions. For generative AI, we go a step further by explaining how outputs are generated, which parameters are in play, and why certain results may vary. This level of visibility allows our customers to understand not just what our AI does, but how and why it behaves the way it does.

Security at the core of every AI solution

Security is foundational to every AI solution we build and deploy. Our development process follows secure-by-design principles, incorporating rigorous safeguards against vulnerabilities such as input poisoning, data leakage, and unauthorized access. We maintain clear documentation, track performance metrics, and establish controls throughout the model lifecycle to ensure that each solution meets our strict internal standards and aligns with industry best practices.

Ethics in practice, not just in principle

Responsible AI requires more than good intentions; it demands structure and accountability. We establish clear roles and responsibilities for everyone involved in the development and governance of our models. Our teams continuously monitor outputs, assess risk, and incorporate human oversight to ensure accuracy and fairness. We also evaluate and publish any measurable bias in our models to provide transparency into how they may affect different user groups.

AI that respects boundaries and protects privacy

Customer data is never used without clear permission and is always handled according to our legal agreements, applicable regulations, and ethical commitments. When necessary, we isolate model training environments to ensure that a customer’s data is used exclusively for that customer’s solution. Human oversight helps monitor usage and uphold our standards for privacy and responsible AI use. Once training is complete and the data has been abstracted into a model, the original data cannot be reverse-engineered from it, adding another layer of protection.
