Powering Intelligent and Personalized Applications

Our Speech and Language technology is a unified suite built on a single, flexible platform.

Stand out in the crowd

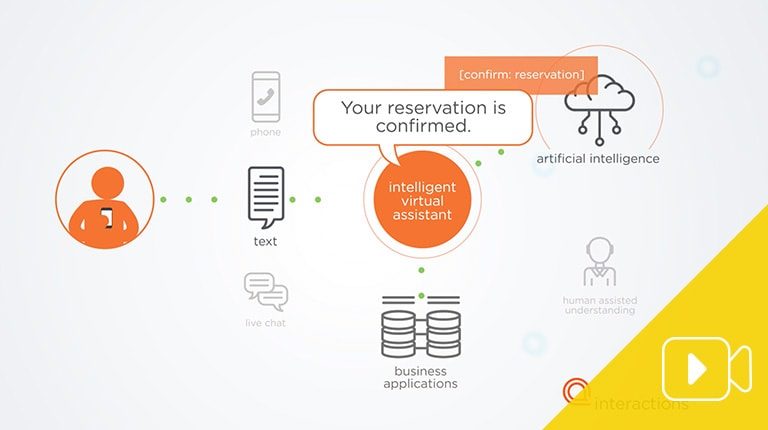

Interactions Speech and Language technology provides the state-of-the-art artificial intelligence that powers our Intelligent Virtual Assistant. Years of applied research in machine learning and deep neural networks position our technology at the forefront of the industry.

Full Stack of AI Technologies

This flexible platform is a unified technology suite providing Automatic Speech Recognition (ASR), Natural Language Processing (NLP), Dialog Management, and Voice Biometrics. That means we can handle conversations on any channel (voice, text, chat, or social) on this single platform.

Build Once, Deploy Often

The Interactions Platform is flexible, modular and scalable, powering a new generation of intelligent and personalized applications. Our platform makes it simple to combine services to optimize your applications. The common code and development tools allow the easy integration of several different modules to provide sophisticated and differentiated applications.

Machine Learning at Scale

Years of applied research in machine learning and deep neural networks position our technology at the forefront of the industry. A host of machine learning tools provides automated learning that continuously improves the performance of all technologies.

We've got what it takes

Automatic Speech Recognition (ASR)

Interactions ASR technology uses uniquely generated acoustic models that predict how words sound in a given environment, such as when talking on a mobile phone. These acoustic models are combined with language models and pronunciations for exceptional accuracy. Interactions ASR solutions are adaptable to specific domains, environments, and languages. ASR converts the spoken word into text, which is the starting point for acting on what was said.
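
To make the idea of combining acoustic and language models concrete, here is a minimal sketch of one common way recognizers rank candidate transcripts: adding a weighted language model score to the acoustic score. It is an illustration only, not the Interactions implementation; the function names, the `lm_weight` parameter, and the scores are all hypothetical.

```python
# Illustrative sketch only: names, weights, and scores are assumptions,
# not part of any Interactions API.

def combined_score(acoustic_logprob, lm_logprob, lm_weight=1.0):
    """Log-linear combination of acoustic and language model scores."""
    return acoustic_logprob + lm_weight * lm_logprob


def best_hypothesis(hypotheses, lm_weight=1.0):
    """Return the text of the candidate with the highest combined score."""
    best = max(
        hypotheses,
        key=lambda h: combined_score(h["acoustic_logprob"], h["lm_logprob"], lm_weight),
    )
    return best["text"]


# Made-up candidates: the second sounds slightly closer to the audio,
# but the language model finds the first far more plausible.
HYPOTHESES = [
    {"text": "recognize speech", "acoustic_logprob": -12.0, "lm_logprob": -4.0},
    {"text": "wreck a nice beach", "acoustic_logprob": -11.5, "lm_logprob": -9.0},
]

if __name__ == "__main__":
    print(best_hypothesis(HYPOTHESES))                 # language model included
    print(best_hypothesis(HYPOTHESES, lm_weight=0.0))  # acoustic score alone
```

With the language model switched off, the acoustically closer but less sensible phrase wins, which is why the two kinds of model are used together.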

Dialog Management

Interactions Dialog Management technologies enable the rapid creation of interactive systems, supporting conversational dialogs on any service channel a customer uses to engage with a brand. The underlying dialog framework enables the interplay of different dialog management technologies, allowing the use of the best technology for the task at hand. Dialog Management enables free-flowing interactions, leveraging knowledge-based approaches that combine the ontology of a given vertical with business rules of a specific customer.
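
As a rough illustration of a knowledge-based approach, the sketch below pairs a tiny ontology for one vertical with a single customer-specific business rule to choose the next dialog step. Everything in it is an assumption made for the example: the banking intents, the slot names, the 1000 limit, and the action strings are hypothetical and do not describe the Interactions framework.

```python
# Illustrative sketch only: the ontology, rule, and action names are hypothetical.

# Vertical ontology: each intent lists the pieces of information it needs.
ONTOLOGY = {
    "transfer_funds": ["source_account", "target_account", "amount"],
    "check_balance": ["account"],
}


def requires_agent(intent, slots):
    """Customer-specific business rule: large transfers go to a human agent."""
    return intent == "transfer_funds" and slots.get("amount", 0) > 1000


def next_action(intent, slots):
    """Decide the next dialog step from the ontology and the business rule."""
    missing = [slot for slot in ONTOLOGY[intent] if slot not in slots]
    if missing:
        return "ask:" + missing[0]      # prompt for the first missing detail
    if requires_agent(intent, slots):
        return "escalate_to_agent"      # the business rule overrides automation
    return "fulfill:" + intent


if __name__ == "__main__":
    print(next_action("check_balance", {}))
    print(next_action("transfer_funds",
                      {"source_account": "A", "target_account": "B", "amount": 5000}))
```

Keeping the ontology and the rule separate means the same vertical knowledge can be reused across customers while each customer supplies its own rules.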

Natural Language Processing (NLP)

Interactions NLP turns data into information. By identifying the people, locations, topics, and intentions found in any segment of speech or text, NLP provides the basis for actionable intelligence applications and business processes.

Voice Biometrics

Interactions Voice Biometrics uses the human voice to provide speaker recognition and authentication. Drawing on the unique characteristics of each voice, it can verify a speaker's claimed identity (verification) or identify a speaker from a known group of people (identification).
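
The difference between verification and identification can be sketched in a few lines. The example assumes each voice has already been reduced to a numeric "voiceprint" vector and compares vectors with cosine similarity. The vectors, the 0.8 threshold, and the function names are hypothetical; this is a simplified illustration, not the Interactions method.

```python
import math

# Illustrative sketch only: voiceprints, threshold, and names are assumptions.


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(sample, claimed_voiceprint, threshold=0.8):
    """Verification: does the sample match the one identity being claimed?"""
    return cosine_similarity(sample, claimed_voiceprint) >= threshold


def identify(sample, enrolled, threshold=0.8):
    """Identification: which enrolled speaker, if any, best matches the sample?"""
    best_name, best_score = None, threshold
    for name, voiceprint in enrolled.items():
        score = cosine_similarity(sample, voiceprint)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Made-up enrolled voiceprints for two speakers.
ENROLLED = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.3]}

if __name__ == "__main__":
    sample = [0.85, 0.15, 0.25]
    print(verify(sample, ENROLLED["alice"]))
    print(identify(sample, ENROLLED))
```

Verification is a one-to-one comparison against a claimed identity, while identification is a one-to-many search that can also return no match.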